The Transmission Control Protocol (TCP) and User Datagram Protocol (UDP) are the two most popular TCP/IP transport layer protocols. These TCP/IP protocols define a variety of functions considered to be OSI transport layer, or Layer 4, features. Some of the functions relate to things you see every day—for instance, when you open multiple web browsers on your PC, how does your PC know which browser to put the next web page in? When a web server sends you 500 IP packets containing the various parts of a web page, and 1 packet has errors, how does your PC recover the lost data? This chapter covers how TCP and UDP perform these two functions, along with the other functions performed by the transport layer.

Foundation Topics

As in the last two chapters, this chapter starts with a general discussion of the functions of an OSI layer, in this case Layer 4, the transport layer. Two specific transport layer protocols, the Transmission Control Protocol (TCP) and the User Datagram Protocol (UDP), are covered later in the chapter. This chapter covers OSI Layer 4 concepts, but mostly through an examination of the TCP and UDP protocols. So, this chapter briefly introduces OSI transport layer details and then dives right into how TCP works.

Typical Features of OSI Layer 4

The transport layer (Layer 4) defines several functions, the most important of which are error recovery and flow control. Routers discard packets for many reasons, including bit errors, congestion and instances in which no correct routes are known. As you have read already, most data-link protocols notice errors but then discard frames that have errors. The OSI transport layer might provide for retransmission (error recovery) and help to avoid congestion (flow control)—or it might not. It really just depends on the particular protocol. However, if error recovery or flow control is performed with the more modern protocol suites, the functions typically are performed with a Layer 4 protocol.

OSI Layer 4 includes some other features as well. Table 6-2 summarizes the main features of the OSI transport layer. You will read about the specific implementation of these protocols in the sections about TCP and UDP.

The Transmission Control Protocol

Each TCP/IP application typically chooses to use either TCP or UDP based on the application’s requirements. For instance, TCP provides error recovery, but to do so, it consumes more bandwidth and uses more processing cycles. UDP does not do error recovery, but it takes less bandwidth and uses fewer processing cycles. Regardless of which of the two TCP/IP transport layer protocols the application chooses to use, you should understand the basics of how each of the protocols works.

TCP provides a variety of useful features, including error recovery. In fact, TCP is best known for its error-recovery feature—but it does more. TCP, defined in RFC 793, performs the following functions:

■ Multiplexing using port numbers

■ Error recovery (reliability)

■ Flow control using windowing

■ Connection establishment and termination

■ End-to-end ordered data transfer

■ Segmentation

TCP accomplishes these functions through mechanisms at the endpoint computers. TCP relies on IP for end-to-end delivery of the data, including routing issues. In other words, TCP performs only part of the functions necessary to deliver the data between applications, and the role that it plays is directed toward providing services for the applications that sit at the endpoint computers. Regardless of whether two computers are on the same Ethernet, or are separated by the entire Internet, TCP performs its functions the same way.

Figure 6-1 shows the fields in the TCP header. Not all the fields are described in this text, but several fields are referred to in this section. The Cisco Press book, Internetworking Technologies Handbook , Fourth Edition, lists the fields along with brief explanations.

Multiplexing Using TCP Port Numbers

TCP provides a lot of features to applications, at the expense of requiring slightly more processing and overhead, as compared to UDP. However, TCP and UDP both use a concept called multiplexing . So, this section begins with an explanation of multiplexing with TCP and UDP. Afterward, the unique features of TCP and UDP are explored.

Multiplexing by TCP and UDP involves the process of how a computer thinks when receiving data. The computer might be running many applications, such as a web browser, an e-mail package, or an FTP client. TCP and UDP multiplexing enables the receiving computer to know which application to give the data to.

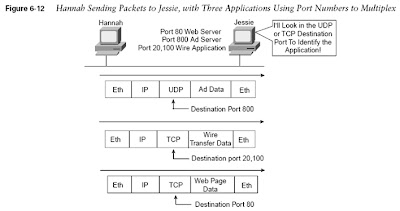

Some examples will help make the need for multiplexing obvious. The sample network consists of two PCs, labeled Hannah and Jessie. Hannah uses an application that she wrote to send advertisements that display on Jessie’s screen. The application sends a new ad to Jessie every 10 seconds. Hannah uses a second application, a wire-transfer application, to send Jessie some money. Finally, Hannah uses a web browser to access the web server that runs on Jessie’s PC. The ad application and wire-transfer application are imaginary, just for this example. The web application works just like it would in real life.

Figure 6-2 shows the example network, with Jessie running three applications:

• A UDP-based ad application

• A TCP-based wire-transfer application

• A TCP web server application

Jessie needs to know which application to give the data to, but all three packets are from the same Ethernet and IP address. You might think that Jessie could look at whether the packet contains a UDP or a TCP header, but, as you see in the figure, two applications (wire transfer and web) both are using TCP.

TCP and UDP solve this problem by using a port number field in the TCP or UDP header, respectively. Each of Hannah’s TCP and UDP segments uses a different destination port number so that Jessie knows which application to give the data to. Figure 6-3 shows an example.

Multiplexing relies on the use of a concept called a socket . A socket consists of three things: an IP address, a transport protocol, and a port number. So, for a web server application on Jessie, the socket would be (10.1.1.2, TCP, port 80) because, by default, web servers use the well-known port 80. When Hannah’s web browser connected to the web server, Hannah used a socket as well—possibly one like this: (10.1.1.1, TCP, 1030). Why 1030? Well, Hannah just needs a port number that is unique on Hannah, so Hannah saw that port 1030 was available and used it. In fact, hosts typically allocate dynamic port numbers starting at 1024 because the ports below 1024 are reserved for well-known applications, such as web services.
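The socket idea can be sketched in a few lines of Python. This is only an illustration of the lookup that the text describes, not how any real operating system implements it. The `Socket` type, the `JESSIE_APPS` table, the `deliver` function, and the port numbers 800 and 20100 for the two imaginary applications are all assumptions made for this example; only port 80 for the web server comes from the text.

```python
from typing import NamedTuple


class Socket(NamedTuple):
    """The three parts of a socket as described in the text."""
    ip: str
    protocol: str  # "TCP" or "UDP"
    port: int


# Hypothetical listening sockets on Jessie (10.1.1.2). Port 80 is the
# well-known web port; 800 and 20100 are invented for the imaginary apps.
JESSIE_APPS = {
    Socket("10.1.1.2", "UDP", 800): "ad application",
    Socket("10.1.1.2", "TCP", 20100): "wire-transfer application",
    Socket("10.1.1.2", "TCP", 80): "web server",
}


def deliver(dest_ip: str, protocol: str, dest_port: int) -> str:
    """Return the application that should receive an arriving segment."""
    return JESSIE_APPS.get(Socket(dest_ip, protocol, dest_port), "no listener")


print(deliver("10.1.1.2", "TCP", 80))   # web server
print(deliver("10.1.1.2", "UDP", 800))  # ad application
print(deliver("10.1.1.2", "TCP", 800))  # no listener
```

Note that the last lookup fails even though port 800 is in use: the transport protocol is part of the socket, so a TCP segment to port 800 does not match the UDP-based ad application.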

In Figure 6-3, Hannah and Jessie used three applications at the same time—hence, there were three socket connections open. Because a socket on a single computer should be unique, a connection between two sockets should identify a unique connection between two computers. The fact that each connection between two sockets is unique means that you can use multiple applications at the same time, talking to applications running on the same or different computers; multiplexing, based on sockets, ensures that the data is delivered to the correct applications. Figure 6-4 shows the three socket connections between Hannah and Jessie.

Port numbers are a vital part of the socket concept. Well-known port numbers are used by servers; other port numbers are used by clients. Applications that provide a service, such as FTP, Telnet, and web servers, open a socket using a well-known port and listen for connection requests. Because these connection requests from clients are required to include both the source and the destination port numbers, the port numbers used by the servers must be well known. Therefore, each server has a hard-coded, well-known port number, as defined in the well-known numbers RFC.

On client machines, where the requests originate, any unused port number can be allocated. The result is that each client on the same host uses a different port number, but a server uses the same port number for all connections. For example, 100 Telnet clients on the same host computer would each use a different port number, but the Telnet server with 100 clients connected to it would have only 1 socket and, therefore, only 1 port number. The combination of source and destination sockets allows all participating hosts to distinguish between the source and destination of the data. (Look to www.rfc-editor.org to find RFCs such as the well-known numbers RFC 1700.)
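The Telnet example can be modeled with a short sketch. It assumes a simple counter that hands out client ports starting at 1024; real hosts choose dynamic ports in their own ways, so `_next_port` and `open_connection` are hypothetical names used only for illustration.

```python
import itertools

# Dynamic ports start at 1024, as the text notes; the counter is a stand-in
# for whatever allocation strategy a real operating system uses.
_next_port = itertools.count(1024)

TELNET_PORT = 23  # well-known Telnet port


def open_connection(client_ip: str, server_ip: str):
    """Return a (source socket, destination socket) pair for one client."""
    source = (client_ip, "TCP", next(_next_port))
    destination = (server_ip, "TCP", TELNET_PORT)
    return source, destination


# 100 Telnet clients on the same host connect to the same server.
connections = [open_connection("10.1.1.1", "10.1.1.2") for _ in range(100)]

client_ports = {src[2] for src, _ in connections}
server_ports = {dst[2] for _, dst in connections}

print(len(client_ports))      # 100 distinct client ports
print(server_ports)           # {23}
print(len(set(connections)))  # 100 unique socket pairs
```

Every connection shares the same destination socket, yet each pair of sockets is still unique because the source port differs.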

NOTE : You can find all RFCs online at www.isi.edu/in-notes/rfcxxxx.txt, where xxxx is the number of the RFC. If you do not know the number of the RFC, you can try searching by topic at www.rfc-editor.org/cgi-bin/rfcsearch.html.

Popular TCP/IP Applications

Throughout your preparation for the CCNA INTRO and ICND exams, you will come across a variety of TCP/IP applications. You should at least be aware of some of the applications that can be used to help manage and control a network.

The World Wide Web (WWW) application exists through web browsers accessing the content available on web servers, as mentioned earlier. While often thought of as an end-user application, you can actually use WWW to manage a router or switch by enabling a web server function in the router or switch, and using a browser to access the router or switch.

The Domain Name System (DNS) allows users to use names to refer to computers, with DNS being used to find the corresponding IP addresses. DNS also uses a client/server model, with DNS servers being controlled by networking personnel, and DNS client functions being part of most any device that uses TCP/IP today. The client simply asks the DNS server to supply the IP address that corresponds to a given name.

Simple Network Management Protocol (SNMP) is an application layer protocol used specifically for network device management. For instance, the CiscoWorks network management software product can be used to query, compile, store, and display information about the operation of a network. In order to query the network devices, CiscoWorks uses SNMP.

Traditionally, in order to move files to and from a router or switch, Cisco used Trivial File Transfer Protocol (TFTP). TFTP defines a protocol for basic file transfer, hence the word “trivial” at the start of the name. Alternatively, routers and switches can use File Transfer Protocol (FTP), which is a much more functional protocol, for transferring files. Both work well for moving files into and out of Cisco devices. FTP allows many more features, making it a good choice for the general end-user population, whereas TFTP client and server applications are very simple, making them good tools as embedded parts of networking devices.

Some of these applications use TCP, and some use UDP. As you will read later, TCP performs error recovery, whereas UDP does not. For instance, Simple Mail Transfer Protocol (SMTP) and Post Office Protocol version 3 (POP3), both used for transferring mail, require guaranteed delivery, so they use TCP. Regardless of which transport layer protocol is used, applications use a well-known port number, so that clients know to which port to attempt to connect. Table 6-3 lists several popular applications and their well-known port numbers.
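As a rough illustration of how a client uses well-known ports, the sketch below maps the applications mentioned in this section to their commonly assigned transport protocol and port. It is not a reproduction of Table 6-3; the dictionary and the `server_socket` helper are names invented for this example.

```python
# Commonly assigned well-known ports for the applications named in the text.
# DNS is listed with UDP, its usual transport for queries; it can also use
# TCP port 53.
WELL_KNOWN_PORTS = {
    "FTP control": ("TCP", 21),
    "Telnet": ("TCP", 23),
    "SMTP": ("TCP", 25),
    "DNS": ("UDP", 53),
    "TFTP": ("UDP", 69),
    "HTTP (WWW)": ("TCP", 80),
    "POP3": ("TCP", 110),
    "SNMP": ("UDP", 161),
}


def server_socket(server_ip: str, application: str):
    """Build the destination socket a client would use for an application."""
    transport, port = WELL_KNOWN_PORTS[application]
    return (server_ip, transport, port)


print(server_socket("10.1.1.2", "HTTP (WWW)"))  # ('10.1.1.2', 'TCP', 80)
print(server_socket("10.1.1.2", "TFTP"))        # ('10.1.1.2', 'UDP', 69)
```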

Error Recovery (Reliability)

TCP provides for reliable data transfer, which is also called reliability or error recovery , depending on what document you read. To accomplish reliability, TCP numbers data bytes using the Sequence and Acknowledgment fields in the TCP header. TCP achieves reliability in both directions, using the Sequence Number field of one direction combined with the Acknowledgment field in the opposite direction. Figure 6-5 shows the basic operation.

In Figure 6-5, the Acknowledgment field in the TCP header sent by the web client (4000) implies the next byte to be received; this is called forward acknowledgment . The sequence number reflects the number of the first byte in the segment. In this case, each TCP segment is 1000 bytes in length; the Sequence and Acknowledgment fields count the number of bytes.

Figure 6-6 depicts the same scenario, but the second TCP segment was lost or was in error. The web client’s reply has an ACK field equal to 2000, implying that the web client is expecting byte number 2000 next. The TCP function at the web server then could recover lost data by resending the second TCP segment. The TCP protocol allows for resending just that segment and then waiting, hoping that the web client will reply with an acknowledgment that equals 4000.

(Although not shown, the sender also sets a re-transmission timer, awaiting acknowledgment, just in case the acknowledgment is lost, or in case all transmitted segments are lost. If that timer expires, the TCP sender sends all segments again.)
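The forward acknowledgment logic can be expressed as a small function. This is a simplified sketch of the receiver's bookkeeping under the assumptions of the two figures (segments of 1000 bytes, starting at byte 1000); `forward_ack` is a hypothetical name, and real TCP implementations track far more state.

```python
def forward_ack(expected: int, received: dict) -> int:
    """Return the next byte the receiver expects.

    expected: sequence number of the first byte not yet received in order.
    received: maps each arrived segment's sequence number to its length.
    """
    # Advance past every segment that continues the in-order byte stream.
    while expected in received:
        expected += received[expected]
    return expected


# All three 1000-byte segments arrive (the Figure 6-5 scenario).
print(forward_ack(1000, {1000: 1000, 2000: 1000, 3000: 1000}))  # 4000

# The second segment is lost (the Figure 6-6 scenario).
print(forward_ack(1000, {1000: 1000, 3000: 1000}))              # 2000
```

Once the sender retransmits the segment that starts at byte 2000, the same call with all three segments present returns 4000, which is the acknowledgment the server is hoping for.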

Flow Control Using Windowing

TCP implements flow control by taking advantage of the Sequence and Acknowledgment fields in the TCP header, along with another field called the Window field. This Window field implies the maximum number of unacknowledged bytes allowed outstanding at any instant in time. The window starts small and then grows until errors occur. The window then “slides” up and down based on network performance, so it is sometimes called a sliding window . When the window is full, the sender will not send, which controls the flow of data.

Figure 6-7 shows windowing with a current window size of 3000. Each TCP segment has 1000 bytes of data.

Notice that the web server must wait after sending the third segment because the window is exhausted. When the acknowledgment has been received, another window can be sent. Because there have been no errors, the web client grants a larger window to the server, so now 4000 bytes can be sent before an acknowledgment is received by the server. In other words, the Window field is used by the receiver to tell the sender how much data it can send before it must stop and wait for the next acknowledgment. As with other TCP features, windowing is symmetrical—both sides send and receive, and, in each case, the receiver grants a window to the sender using the Window field.

Windowing does not require that the sender stop sending in all cases. If an acknowledgment is received before the window is exhausted, a new window begins and the sender continues to send data until the current window is exhausted. (The term, Positive Acknowledgement and Retransmission [PAR] , is sometimes used to describe the error recovery and windowing processes used by TCP.)
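The sender's side of windowing reduces to simple arithmetic. The sketch below assumes fixed-size segments, as in Figure 6-7; `segments_allowed` is a hypothetical helper, not part of any TCP API.

```python
def segments_allowed(window: int, unacked: int, segment_size: int) -> int:
    """How many full segments may be sent before the window is exhausted."""
    return max(window - unacked, 0) // segment_size


# Window of 3000 bytes, nothing outstanding: three 1000-byte segments.
print(segments_allowed(3000, 0, 1000))     # 3

# All three segments are unacknowledged: the sender must wait.
print(segments_allowed(3000, 3000, 1000))  # 0

# The acknowledgment arrives and grants a window of 4000.
print(segments_allowed(4000, 0, 1000))     # 4

# An acknowledgment arrives early, with 1000 bytes still outstanding.
print(segments_allowed(3000, 1000, 1000))  # 2
```

The last case shows why windowing does not always force the sender to stop: as long as some of the window remains, sending continues.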

Connection Establishment and Termination

TCP connection establishment occurs before any of the other TCP features can begin their work. Connection establishment refers to the process of initializing sequence and acknowledgment fields and agreeing to the port numbers used. Figure 6-8 shows an example of connection establishment flow.

This three-way connection-establishment flow must complete before data transfer can begin. The connection exists between the two sockets, although there is no single socket field in the TCP header. Of the three parts of a socket, the IP addresses are implied based on the source and destination IP addresses in the IP header. TCP is implied because a TCP header is in use, as specified by the protocol field value in the IP header. Therefore, the only parts of the socket that need to be encoded in the TCP header are the port numbers.

TCP signals connection establishment using 2 bits inside the flags field of the TCP header. Called the SYN and ACK flags, these bits have a particularly interesting meaning. SYN means “synchronize the sequence numbers,” which is one necessary component in initialization for TCP. The ACK flag means “the Acknowledgment field is valid in this header.” Until the sequence numbers are initialized, the Acknowledgment field cannot be very useful. Also notice that in the initial TCP segment in Figure 6-8, no acknowledgment number is shown; this is because that number is not valid yet. Because the Acknowledgment field must be valid in all the ensuing segments, the ACK bit continues to be set until the connection is terminated.

TCP initializes the Sequence Number and Acknowledgment Number fields to any number that fits into the 4-byte fields; the actual values shown in Figure 6-8 are simply example values. The initialization flows are each considered to have a single byte of data, as reflected in the Acknowledgment Number fields in the example.
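The numbering rules for the three-way flow can be sketched as follows. The initial sequence numbers 200 and 1450 are arbitrary example values chosen for this sketch, and `three_way_handshake` is a hypothetical function that only models the header fields, not a real connection.

```python
SEQ_SPACE = 2 ** 32  # sequence numbers fit in a 4-byte field


def three_way_handshake(client_isn: int, server_isn: int):
    """Return the three segments as (sender, flags, seq, ack) tuples.

    ack is None when the ACK flag is not set, so the field is not valid.
    """
    syn = ("client", "SYN", client_isn, None)
    # Each SYN counts as one byte of data, so it is acknowledged with +1.
    syn_ack = ("server", "SYN,ACK", server_isn, (client_isn + 1) % SEQ_SPACE)
    ack = ("client", "ACK", (client_isn + 1) % SEQ_SPACE,
           (server_isn + 1) % SEQ_SPACE)
    return [syn, syn_ack, ack]


for segment in three_way_handshake(200, 1450):
    print(segment)
# ('client', 'SYN', 200, None)
# ('server', 'SYN,ACK', 1450, 201)
# ('client', 'ACK', 201, 1451)
```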

Figure 6-9 shows TCP connection termination. This four-way termination sequence is straightforward and uses an additional flag, called the FIN bit . (FIN is short for “finished,” as you might guess.) One interesting note: Before the device on the right sends the third TCP segment in the sequence, it notifies the application that the connection is coming down.

It then waits on an acknowledgment from the application before sending the third segment in the figure. Just in case the application takes some time to reply, the PC on the right sends the second flow in the figure, acknowledging that the other PC wants to take down the connection. Otherwise, the PC on the left might resend the first segment over and over.
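The four segments can be modeled the same way as the establishment flow. The sketch assumes that a FIN, like a SYN, counts as one byte for acknowledgment purposes, and it uses arbitrary example sequence numbers; `four_way_close` is a hypothetical name.

```python
def four_way_close(left_seq: int, right_seq: int):
    """Return the termination segments as (sender, flags, seq, ack) tuples."""
    return [
        # The PC on the left asks to take down the connection.
        ("left", "ACK,FIN", left_seq, right_seq),
        # Sent right away so the left side does not resend its FIN while
        # the application on the right is still being notified.
        ("right", "ACK", right_seq, left_seq + 1),
        # Sent only after the application on the right agrees to close.
        ("right", "ACK,FIN", right_seq, left_seq + 1),
        # The left side acknowledges the FIN from the right.
        ("left", "ACK", left_seq + 1, right_seq + 1),
    ]


for segment in four_way_close(1000, 1470):
    print(segment)
# ('left', 'ACK,FIN', 1000, 1470)
# ('right', 'ACK', 1470, 1001)
# ('right', 'ACK,FIN', 1470, 1001)
# ('left', 'ACK', 1001, 1471)
```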

Connectionless and Connection-Oriented Protocols

The terms connection-oriented and connectionless have some relatively well-known connotations inside the world of networking protocols. The meaning of the terms is intertwined with error recovery and flow control, but they are not the same. So, first, some basic definitions are in order:

• Connection-oriented protocol —A protocol either that requires an exchange of messages before data transfer begins or that has a required pre-established correlation between two endpoints

• Connectionless protocol —A protocol that does not require an exchange of messages and that does not require a pre-established correlation between two endpoints.

TCP is indeed connection oriented because of the set of three messages that establish a TCP connection. Likewise, Sequenced Packet Exchange (SPX), a transport layer protocol from Novell, is connection oriented. When using permanent virtual circuits (PVCs), Frame Relay does not require any messages to be sent ahead of time, but it does require predefinition in the Frame Relay switches, establishing a connection between two Frame Relay–attached devices. ATM PVCs are also connection oriented, for similar reasons.

NOTE : Some documentation refers to the terms connected and connection-oriented . These terms are used synonymously. You will most likely see the use of the term connection-oriented in Cisco documentation.

Many people confuse the real meaning of connection-oriented with the definition of a reliable, or error-recovering, protocol. TCP happens to do both, but just because a protocol is connection-oriented does not mean that it also performs error recovery. Table 6-4 lists some popular protocols and tells whether they are connected or reliable.

Data Segmentation and Ordered Data Transfer

Applications need to send data. Sometimes the data is small—in some cases, a single byte. In other cases, for instance, with a file transfer, the data might be millions of bytes. Each different type of data link protocol typically has a limit on the maximum transmission unit ( MTU ) that can be sent. MTU refers to the size of the “data,” according to the data link layer—in other words, the size of the Layer 3 packet that sits inside the data field of a frame. For many data link protocols, Ethernet included, the MTU is 1500 bytes.

TCP handles the fact that an application might give it millions of bytes to send by segmenting the data into smaller pieces, called segments . Because an IP packet can often be no more than 1500 bytes, and because IP and TCP headers are 20 bytes each, TCP typically segments large data into 1460 byte (or smaller) segments.
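The 1460-byte figure follows directly from the header sizes, and the segmentation itself is a simple slicing operation. The sketch below assumes headers without options and uses a hypothetical `segment` function; the starting sequence number 1000 is an arbitrary example value.

```python
MTU = 1500       # bytes, typical for Ethernet
IP_HEADER = 20   # bytes, IP header without options
TCP_HEADER = 20  # bytes, TCP header without options
MAX_SEGMENT_DATA = MTU - IP_HEADER - TCP_HEADER  # 1460 bytes of data


def segment(data: bytes, first_seq: int, size: int = MAX_SEGMENT_DATA):
    """Split data into (sequence number, payload) pairs of at most size bytes."""
    return [(first_seq + offset, data[offset:offset + size])
            for offset in range(0, len(data), size)]


pieces = segment(b"x" * 4000, first_seq=1000)
print(MAX_SEGMENT_DATA)                               # 1460
print([(seq, len(payload)) for seq, payload in pieces])
# [(1000, 1460), (2460, 1460), (3920, 1080)]
```

Each sequence number is the number of the first byte in that segment, so the receiver can tell exactly where every piece belongs.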

The TCP receiver performs reassembly when it receives the segments. To reassemble the data, TCP must recover lost segments, as was previously covered. However, the TCP receiver must also reorder segments that arrive out of sequence. Because IP routing can choose to balance traffic across multiple links, the actual segments may be delivered out of order. So, the TCP receiver also must perform ordered data transfer by reassembling the data into the original order. The process is not hard to imagine: If segments arrive with the sequence numbers 1000, 3000, and 2000, each with 1000 bytes of data, the receiver can reorder them and no retransmissions are required.
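The reordering step for the 1000, 3000, 2000 example can be sketched like this. The `reassemble` function is a hypothetical, simplified model: it waits until all segments are present and raises an error if a gap remains, whereas a real receiver would wait for the missing data to be retransmitted.

```python
def reassemble(segments):
    """Rebuild the byte stream from (sequence number, payload) pairs."""
    ordered = sorted(segments, key=lambda seg: seg[0])
    if not ordered:
        return b""
    expected = ordered[0][0]
    for seq, payload in ordered:
        if seq != expected:
            # A gap means a segment is missing and must be recovered first.
            raise ValueError(f"missing data starting at byte {expected}")
        expected += len(payload)
    return b"".join(payload for _, payload in ordered)


# Segments arrive in the order 1000, 3000, 2000, each with 1000 bytes.
arrived = [(1000, b"A" * 1000), (3000, b"C" * 1000), (2000, b"B" * 1000)]
data = reassemble(arrived)
print(data == b"A" * 1000 + b"B" * 1000 + b"C" * 1000)  # True
```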

You should also be aware of some terminology related to TCP segmentation. The TCP header, along with the data field, together are called a TCP segment . This term is similar to a data link frame and an IP packet, in that the terms refer to the headers and trailers for the respective layers, plus the encapsulated data. The term L4PDU also can be used instead of the term TCP segment because TCP is a Layer 4 protocol.

TCP Function Summary

Table 6-5 summarizes TCP functions.

The User Datagram Protocol

UDP provides a service for applications to exchange messages. Unlike TCP, UDP is connectionless and provides no reliability, no windowing, and no reordering of the received data. However, UDP provides some functions of TCP, such as data transfer, segmentation, and multiplexing using port numbers, and it does so with fewer bytes of overhead and with less processing required.

UDP multiplexes using port numbers in an identical fashion to TCP. The only difference in UDP (compared to TCP) sockets is that, instead of designating TCP as the transport protocol, the transport protocol is UDP. An application could open identical port numbers on the same host but use TCP in one case and UDP in the other—that is not typical, but it certainly is allowed. If a particular service supports both TCP and UDP transport, it uses the same value for the TCP and UDP port numbers, as shown in the assigned numbers RFC (currently RFC 1700—see www.isi.edu/in-notes/rfc1700.txt).

UDP data transfer differs from TCP data transfer in that no reordering or recovery is accomplished. Applications that use UDP are tolerant of the lost data, or they have some application mechanism to recover lost data. For example, DNS requests use UDP because the user will retry an operation if the DNS resolution fails. The Network File System (NFS), a remote file system application, performs recovery with application layer code, so UDP features are acceptable to NFS.

Table 6-6 contrasts typical transport layer functions as performed (or not performed) by UDP or TCP.

Figure 6-10 shows TCP and UDP header formats. Note the existence of both Source Port and Destination Port fields in the TCP and UDP headers, but the absence of Sequence Number and Acknowledgment Number fields in the UDP header. UDP does not need these fields because it makes no attempt to number the data for acknowledgments or resequencing.

UDP gains some advantages over TCP by not using the Sequence and Acknowledgment fields. The most obvious advantage of UDP over TCP is that there are fewer bytes of overhead. Not as obvious is the fact that UDP does not require waiting on acknowledgments or holding the data in memory until it is acknowledged. This means that UDP applications are not artificially slowed by the acknowledgment process, and memory is freed more quickly.
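To make the overhead difference concrete, the sketch below builds a UDP header with Python's standard `struct` module. A UDP header holds four 16-bit fields (source port, destination port, length, and checksum), for 8 bytes in total, compared to the 20-byte TCP header mentioned earlier. The `udp_header` helper and the example ports are assumptions for illustration, and the checksum is left at 0 as a placeholder rather than computed.

```python
import struct

# Four 16-bit fields in network byte order: source port, destination port,
# length (header plus data), and checksum.
UDP_HEADER = struct.Struct("!HHHH")
TCP_HEADER_MIN = 20  # bytes, TCP header without options


def udp_header(src_port: int, dst_port: int, data_len: int,
               checksum: int = 0) -> bytes:
    """Pack a UDP header; checksum 0 is a placeholder, not a real checksum."""
    length = UDP_HEADER.size + data_len
    return UDP_HEADER.pack(src_port, dst_port, length, checksum)


header = udp_header(1030, 53, data_len=32)
print(len(header))                       # 8
print(UDP_HEADER.unpack(header))         # (1030, 53, 40, 0)
print(TCP_HEADER_MIN - UDP_HEADER.size)  # 12 fewer bytes than TCP
```

The port fields are present just as in TCP, but there is nothing to hold a sequence or acknowledgment number, which matches the header comparison in Figure 6-10.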

Foundation Summary

The “Foundation Summary” section of each chapter lists the most important facts from the chapter. Although this section does not list every fact from the chapter that will be on your CCNA exam, a well-prepared CCNA candidate should know, at a minimum, all the details in each “Foundation Summary” section before going to take the exam.

The terms connection-oriented and connectionless have some relatively well-known connotations inside the world of networking protocols. The meaning of the terms is intertwined with error recovery and flow control, but they are not the same. Some basic definitions are in order:

• Connection-oriented protocol —A protocol either that requires an exchange of messages before data transfer begins or that has a required pre-established correlation between two endpoints

• Connectionless protocol —A protocol that does not require an exchange of messages and that does not require a pre-established correlation between two endpoints

Figure 6-11 shows an example of windowing.

TCP and UDP multiplex between different applications using the port source and destination number fields. Figure 6-12 shows an example.

Figure 6-13 depicts TCP error recovery.

Figure 6-14 shows an example of a TCP connection-establishment flow.

Table 6-7 contrasts typical transport layer functions as performed (or not performed) by UDP or TCP.

=====================================

Quiz 6: Fundamentals of TCP & UDP

1)

(1 marks)

How many valid host IP addresses does each Class B network contain?

16,777,214

16,777,216

65,536

65,534

65,532

32,768

32,766

32,764

Leave blank

2)

(1 marks)

Which term is defined by the following phrase: “the type of protocol that is being forwarded when routers perform routing.”

Routed protocol

Routing protocol

RIP

IOS

Route protocol

Leave blank

3)

(1 marks)

What is the range for the values of the first octet for Class A IP networks?

0 to 127

0 to 126

1 to 127

1 to 126

128 to 191

128 to 192

Leave blank

4)

(1 marks)

PC1 and PC2 are on two different Ethernets that are separated by an IP router. PC1’s IP address is 10.1.1.1, and no subnetting is used. Which of the following addresses could be used for PC2?

10.1.1.2

10.2.2.2

10.200.200.1

9.1.1.1

225.1.1.1

1.1.1.1

Leave blank

5)

(1 marks)

How many valid host IP addresses does each Class C network contain?

65,536

65,534

65,532

32,768

32,766

256

254

Leave blank
