As you learned in the previous chapter, OSI Layers 1 and 2 map closely to the network interface layer of TCP/IP. In this chapter, you will learn more details about the functions of each of the two lowest layers in the OSI reference model, with specific coverage of Ethernet local-area networks (LANs). The introduction to this book mentioned that the INTRO exam covers some topics lightly and covers others to great depth. As implied in the title, this chapter hits the fundamentals of Ethernet, paving the way for deeper coverage of other topics later in the book. Chapter 9, “Cisco LAN Switching Basics,” and Chapter 10, “Virtual LANs and Trunking,” delve into a much deeper examination of LAN switches and virtual LANs. Chapter 11, “LAN Cabling, Standards, and Topologies,” increases your breadth of knowledge about Ethernet, including a lot of broad details about Ethernet standards, cabling, and topologies—all of which can be on the exam.

Foundation Topics

Ethernet is the undisputed king of LAN standards today. Fifteen years ago, people wondered whether Ethernet or Token Ring would win the battle of the LANs. Eight years ago, it looked like Ethernet would win that battle, but it might lose to an upstart called Asynchronous Transfer Mode (ATM) in the LAN. Today when you think of LANs, no one even questions what type: it’s Ethernet.

Ethernet has remained a viable LAN option for many years because it has adapted to the changing needs of the marketplace while retaining some of the key features of the original protocols. From the original commercial specifications that transferred data at 10 megabits per second (Mbps) to the 10 gigabits per second (Gbps) rates today, Ethernet has evolved and become the most prolific LAN protocol ever.

Ethernet defines both Layer 1 and Layer 2 functions, so this chapter starts with some basic concepts in relation to OSI Layers 1 and 2. After that, the three earliest Ethernet standards are covered, focusing on the physical layer details. Next, this chapter covers data link layer functions, which are common among all the earlier Ethernet standards as well as the newer standards. Finally, two of the more recent standards, Fast Ethernet and Gigabit Ethernet, are introduced.

OSI Perspectives on Local-Area Networks

The OSI physical and data link layers work together to provide the function of delivery of data across a wide variety of types of physical networks. Some obvious physical details must be agreed upon before communication can happen, such as the cabling, the types of connectors used on the ends of the cables, and voltage and current levels used to encode a binary 0 or 1.

The data link layer typically provides functions that are less obvious at first glance. For instance, it defines the rules (protocols) to determine when a computer is allowed to use the physical network, when the computer should not use the network, and how to recognize errors that occurred during transmission of data. Part II, “Operating Cisco Devices,” and Part III, “LAN Switching,” cover a few more details about Ethernet Layers 1 and 2.

Typical LAN Features for OSI Layer 1

The OSI physical layer, or Layer 1, defines the details of how to move data from one device to another. In fact, many people think of OSI Layer 1 as “sending bits.” Higher layers encapsulate the data and decide when and what to send. But eventually, the sender of the data needs to actually transmit the bits to another device. The OSI physical layer defines the standards used to send and receive bits across a physical network.

To keep some perspective on the end goal, consider the example of the web browser requesting a web page from the web server. Figure 3-1 reminds you of the point at which Bob has built the HTTP, TCP, IP, and Ethernet headers, and is ready to send the data to R2.

In the figure, Bob’s Ethernet card uses the Ethernet physical layer specifications to transmit the bits shown in the Ethernet frame across the physical Ethernet. The OSI physical layer and its equivalent protocols in TCP/IP define all the details that allow the transmission of the bits from one device to the next. For instance, the physical layer defines the details of cabling— the maximum length allowed for each type of cable, the number of wires inside the cable, the shape of the connector on the end of the cable, and other details. Most cables include several conductors (wires) inside the cable; the endpoint of these wires, which end inside the connector, are called pins. So, the physical layer also must define the purpose of each pin, or wire. For instance, on a standard Category 5 (CAT5) unshielded twisted-pair (UTP) Ethernet cable, pins 1 and 2 are used for transmitting data by sending an electrical signal over the wires; pins 3 and 6 are used for receiving data. Figure 3-2 shows an example Ethernet cable, with a couple of different views of the RJ-45 connector.

The picture on the left side of the figure shows a Registered Jack 45 (RJ-45) connector, which is a typical connector used with Ethernet cabling today. The right side shows the pins used on the cable when supporting some of the more popular Ethernet standards. One pair of wires is used for transmitting data, using pins 1 and 2, and another pair is used for receiving data, using pins 3 and 6. The Ethernet shown between Bob and R2 in Figure 3-1 could be built with cables, using RJ-45 connectors, along with hubs or switches. (Hubs and switches are defined later in this chapter.)

The cable shown in Figure 3-2 is called a straight-through cable. A straight-through cable connects pin 1 on one end of the cable with pin 1 on the other end, pin 2 on one end to pin 2 on the other, and so on. If you hold the cable so that you compare both connectors side by side, with the same orientation for each connector, you should see the same color wires for each pin with a straight-through cable.

One of the things that surprises people who have never thought about network cabling is the fact that many cables use two wires for transmitting data and that the wires are twisted around each other inside the cable. When two wires are twisted inside the cable, they are called a twisted pair (ingenious name, huh?). By twisting the wires, the electromagnetic interference caused by the electrical current is greatly reduced. So, most LAN cabling uses two twisted pairs—one pair for transmitting and one for receiving.

The OSI physical layer and its equivalent protocols in TCP/IP define all the details that allow the transmission of the bits from one device to the next. In later sections of this chapter, you will learn more about the specific physical layer standards for Ethernet. Table 3-2 summarizes the most typical details defined by physical layer protocols.

Typical LAN Features for OSI Layer 2

OSI Layer 2, the data link layer, defines the standards and protocols used to control the transmission of data across a physical network. If you think of Layer 1 as “sending bits,” you can think of Layer 2 as meaning “knowing when to send the bits, noticing when errors occurred when sending bits, and identifying the computer that needs to get the bits.” Similar to the section about the physical layer, this short section describes the basic data link layer functions. Later, you will read about the specific standards and protocols for Ethernet.

Data link protocols perform many functions, with a variety of implementation details. Because each data link protocol “controls” a particular type of physical layer network, the details of how a data link protocol works must include some consideration of the physical network. However, regardless of the type of physical network, most data link protocols perform the following functions:

• Arbitration—Determines when it is appropriate to use the physical medium

• Addressing—Ensures that the correct recipient(s) receives and processes the data that is sent

• Error detection—Determines whether the data made the trip across the physical medium successfully

• Identification of the encapsulated data—Determines the type of header that follows the data link header

Data Link Function 1: Arbitration

Imagine trying to get through an intersection in your car when all the traffic signals are out— you all want to use the intersection, but you had better use it one at a time. You finally get through the intersection based on a lot of variables—on how tentative you are, how big the other cars are, how new or old your car is, and how much you value your own life! Regardless, you cannot allow cars from every direction to enter the intersection at the same time without having some potentially serious collisions.

With some types of physical networks, data frames can collide if devices can send any time they want. When frames collide in a LAN, the data in each frame is corrupted and the LAN is unusable for a brief moment—not too different from a car crash in the middle of an intersection. The specifications for these data-link protocols define how to arbitrate the use of the physical medium to avoid collisions, or at least to recover from the collisions when they occur.

Ethernet uses the carrier sense multiple access with collision detection (CSMA/CD) algorithm for arbitration. The CSMA/CD algorithm is covered in the upcoming section on Ethernet.

Data Link Function 2: Addressing

When I sit and have lunch with my friend Gary, and just Gary, he knows I am talking to him. I don’t need to start every sentence by saying “Hey, Gary….” Now imagine that a few other people join us for lunch. I might need to say something like “Hey, Gary…” before saying something so that Gary knows I’m talking to him. Data-link protocols define addresses for the same reasons. Many physical networks allow more than two devices attached to the same physical network. So, data-link protocols define addresses to make sure that the correct device listens and receives the data that is sent. By putting the correct address in the data-link header, the sender of the frame can be relatively sure that the correct receiver will get the data. It’s just like sitting at the lunch table and having to say “Hey Gary…” before talking to Gary so that he knows you are talking to him and not someone else.

Each data-link protocol defines its own unique addressing structure. For instance, Ethernet uses Media Access Control (MAC) addresses, which are 6 bytes long and are represented as a 12-digit hexadecimal number. Frame Relay typically uses a 10-bit-long address called a data-link connection identifier (DLCI)—notice that the name even includes the phrase data link. This chapter covers the details of Ethernet addressing. You will learn about Frame Relay addressing in the CCNA ICND Exam Certification Guide.

Data Link Function 3: Error Detection

Error detection discovers whether bit errors occurred during the transmission of the frame. To do this, most data-link protocols include a frame check sequence (FCS) or cyclical redundancy check (CRC) field in the data-link trailer. This field contains a value that is the result of a mathematical formula applied to the data in the frame. Both the sender and the receiver of the frame use the same calculation: the sender puts the result of the formula in the FCS field before sending the frame, and the receiver plugs the contents of the received frame into the same formula. If the FCS sent by the sender matches what the receiver calculates, the frame did not have any errors during transmission; if the two values differ, an error is detected.

Error detection does not imply recovery; most data links, including IEEE 802.5 Token Ring and 802.3 Ethernet, do not provide error recovery. The FCS allows the receiving device to notice that errors occurred and then discard the data frame. Error recovery, which includes the resending of the data, is the responsibility of another protocol. For instance, TCP performs error recovery, as described in Chapter 6, “Fundamentals of TCP and UDP.”
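The sender-and-receiver calculation can be sketched in a few lines of Python. This is only an illustration: it uses the CRC-32 function from Python's zlib module as the "mathematical formula," and the helper names add_fcs and fcs_ok, the sample data, and the byte order of the trailer are assumptions made for the example rather than a description of the exact on-wire Ethernet layout.

```python
import zlib

def add_fcs(frame: bytes) -> bytes:
    """Sender side: compute a CRC-32 over the frame and append it as a 4-byte trailer."""
    fcs = zlib.crc32(frame)
    return frame + fcs.to_bytes(4, "little")

def fcs_ok(frame_with_fcs: bytes) -> bool:
    """Receiver side: recompute the CRC-32 and compare it with the received trailer."""
    body, trailer = frame_with_fcs[:-4], frame_with_fcs[-4:]
    return zlib.crc32(body) == int.from_bytes(trailer, "little")

# Hypothetical frame contents, used only to exercise the two helpers.
sent = add_fcs(b"example header and data")

# Flip a single bit to imitate an error during transmission.
damaged = bytes([sent[0] ^ 0x01]) + sent[1:]

for name, frame in (("sent", sent), ("damaged", damaged)):
    # A receiver keeps a frame that passes the check and discards one that fails;
    # it does not ask for the frame again (that is left to protocols such as TCP).
    print(name, "keep" if fcs_ok(frame) else "discard")
```

Note that the receiver in this sketch only decides whether to keep or discard the frame, which matches the point that error detection does not include error recovery.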

Data Link Function 4: Identifying the Encapsulated Data

Finally, the fourth part of a data link identifies the contents of the Data field in the frame. Figure 3-3 helps make the usefulness of this feature apparent. The figure shows a PC that uses both TCP/IP to talk to a web server and Novell IPX to talk to a Novell NetWare server.

When PC1 receives data, should it give the data to the TCP/IP software or the NetWare client software? Of course, that depends on what is inside the Data field. If the data came from the Novell server, PC1 hands off the data to the NetWare client code. If the data comes from the web server, PC1 hands it off to the TCP/IP code. But how does PC1 make this decision? Well, IEEE Ethernet 802.2 Logical Link Control (LLC) uses a field in its header to identify the type of data in the Data field. PC1 examines that field in the received frame to decide whether the packet is an IP packet or an IPX packet.

Each data-link header has a field, generically with a name that has the word Type in it, to identify the type of protocol that sits inside the frame’s data field. In each case, the Type field has a code that means IP, IPX, or some other designation, defining the type of protocol header that follows.
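As a rough sketch of how a receiver might use such a field, the following Python fragment maps a type code to the software that should get the data. The two codes shown are the well-known Ethernet Type values for IP (0x0800) and Novell IPX (0x8137); the table name, the function name hand_off, and the text labels are hypothetical and stand in for PC1's real protocol software.

```python
# Type code -> software that should receive the contents of the Data field.
# The labels are illustrative placeholders, not real software interfaces.
TYPE_HANDLERS = {
    0x0800: "TCP/IP software",          # IP
    0x8137: "NetWare client software",  # Novell IPX
}

def hand_off(type_code: int) -> str:
    """Return which software gets the data, based only on the type code."""
    return TYPE_HANDLERS.get(type_code, "discard (unknown protocol)")

print(hand_off(0x0800))
print(hand_off(0x8137))
```

The receiver never has to inspect the data itself to make the decision; the code in the header is enough.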

Early Ethernet Standards

Now that you have a little better understanding of some of the functions of physical and data link standards, the next section focuses on Ethernet in particular. This chapter covers some of the basics, while Chapters 9 through 11 cover the topics in more detail.

In this section of the chapter, you learn about the three earliest types of Ethernet networks. The term Ethernet refers to a family of protocols and standards that together define the physical and data link layers of the world’s most popular type of LAN. Many variations of Ethernet exist; this section covers the functions and protocol specifications for the more popular types of Ethernet, including 10BASE-T, Fast Ethernet, and Gigabit Ethernet. Also, to help you appreciate how some of the features of Ethernet work, this section covers historical knowledge on two older types of Ethernet, 10BASE2 and 10BASE5 Ethernet.

Standards Overview

Like most protocols, Ethernet began life inside a corporation that was looking to solve a specific problem. Xerox needed an effective way to allow a new invention, called the personal computer, to be connected in its offices. From that, Ethernet was born. (Look at inventors.about.com/library/weekly/aa111598.htm for an interesting story on the history of Ethernet.) Eventually, Xerox teamed with Intel and Digital Equipment Corp. (DEC) to further develop Ethernet, so the original Ethernet became known as DIX Ethernet, meaning DEC, Intel, and Xerox.

The IEEE began creating a standardized version of Ethernet in February 1980, building on the work performed by DEC, Intel, and Xerox. The IEEE Ethernet specifications that match OSI Layer 2 were divided into two parts: the Media Access Control (MAC) and Logical Link Control (LLC) sublayers. The IEEE formed a committee to work on each part—the 802.3 committee to work on the MAC sublayer, and the 802.2 committee to work on the LLC sublayer.

Table 3-3 lists the various protocol specifications for the original three IEEE LAN standards, plus the original prestandard version of Ethernet.

The Original Ethernet Standards: 10BASE2 and 10BASE5

Ethernet is best understood by first considering the early DIX Ethernet specifications, called 10BASE5 and 10BASE2. These two Ethernet specifications defined the details of the physical layer of early Ethernet networks. (10BASE2 and 10BASE5 differ in the cabling details, but for the discussion included in this chapter, you can consider them as behaving identically.) With these two specifications, the network engineer installs a series of coaxial cables, connecting each device on the Ethernet network—there is no hub, switch, or wiring panel. The Ethernet consists solely of the collective Ethernet cards in the computers and the cabling. The series of cables creates an electrical bus that is shared among all devices on the Ethernet. When a computer wants to send some bits to another computer on the bus, it sends an electrical signal, and the electricity propagates to all devices on the Ethernet.

Because it is a single bus, if two or more signals were sent at the same time, the two would overlap and collide, making both signals unintelligible. So, not surprisingly, Ethernet also defined a specification for how to ensure that only one device sends traffic on the Ethernet at one time; otherwise, the Ethernet would have been unusable. The algorithm, known as the carrier sense multiple access with collision detection (CSMA/CD) algorithm, defines how the bus is accessed.

In human terms, CSMA/CD is similar to what happens in a meeting room with many attendees. Some people talk much of the time. Some do not talk, but they listen. Others talk occasionally. Because it is hard for humans to understand what two people are saying at the same time, generally one person is talking and the rest are listening. Imagine that Bob and Larry both want to reply to the current speaker’s comments. As soon as the speaker takes a breath, Bob and Larry might both try to speak. If Larry hears Bob’s voice before Larry actually makes a noise, Larry might stop and let Bob speak. Or, maybe they both start at almost the same time, so they talk over each other and many others in the room can’t hear what was said. Then there’s the proverbial “Excuse me, you talk next,” and eventually Larry or Bob talks. Or, in some cases, another person jumps in and talks while Larry and Bob are both backing off. These “rules” are based on your culture; CSMA/CD is based on Ethernet protocol specifications and achieves the same type of goal.

The solid lines in the figure represent the physical network cabling. The dashed lines with arrows represent the path that Larry’s transmitted frame takes. Larry sends a signal out his Ethernet card onto the cable, and both Bob and Archie receive the signal. The cabling creates a physical electrical bus, meaning that the transmitted signal is received by all stations on the LAN. Just like a school bus stops at everyone’s house along a route, the electrical signal on a 10BASE2 or 10BASE5 network is propagated to each station on the LAN.

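Because the transmitted electrical signal travels along the entire length of the bus, when two stations send at the same time, a collision occurs. The collision first occurs on the wire, and then some time elapses before the sending stations hear the collision, so technically, the stations send a few more bits before they actually notice the collision. CSMA/CD logic helps prevent collisions and also defines how to act when a collision does occur. The CSMA/CD algorithm works like this: a device with a frame to send listens until the Ethernet is not busy and then begins sending; while it sends, it keeps listening to make sure that no collision occurred; if the senders hear a collision, each one sends a jamming signal so that all stations recognize the collision, waits for a random amount of time, and then starts over by listening again. So, all devices on the Ethernet need to use CSMA/CD to avoid collisions and to recover when inadvertent collisions occur.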
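The listen, send, detect, and back-off cycle of CSMA/CD can be sketched in Python as follows. Treat it as a simplified model: the callables medium_busy, collided, and wait are hypothetical stand-ins for the Ethernet card's circuitry, and the specific numbers (a random range that doubles after each collision up to 10 doublings, and a limit of 16 attempts) come from the IEEE 802.3 backoff rules rather than from this chapter.

```python
import random

MAX_ATTEMPTS = 16   # 802.3 drops the frame after 16 failed attempts
BACKOFF_CAP = 10    # the random range stops doubling after 10 collisions

def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Pick how many slot times to wait after the given number of collisions."""
    exponent = min(collisions, BACKOFF_CAP)
    return rng.randrange(2 ** exponent)   # a value from 0 to 2**exponent - 1

def send_frame(medium_busy, collided, rng=None, wait=lambda slots: None) -> int:
    """Return the number of attempts needed to send one frame.

    medium_busy() and collided() are hypothetical callables that model
    carrier sense and collision detection; wait(slots) models the pause.
    """
    rng = rng or random.Random()
    for attempt in range(1, MAX_ATTEMPTS + 1):
        while medium_busy():      # 1. listen until the Ethernet is not busy
            pass
        # 2. begin sending; 3. keep listening for a collision while sending
        if not collided():
            return attempt        # the frame went out without a collision
        # 4. a real card sends a jamming signal at this point
        wait(backoff_slots(attempt, rng))   # 5. wait a random time
        # 6. loop back to step 1 and try again
    raise RuntimeError("excessive collisions: frame dropped")

# Example: an idle medium where the first two attempts collide.
outcomes = iter([True, True, False])
print(send_frame(lambda: False, lambda: next(outcomes)))
```

The random wait is the important design choice: if both senders waited the same fixed time, they would simply collide again.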

Repeaters

Like any type of network, 10BASE5 and 10BASE2 had limitations on the total length of a cable. With 10BASE5, the limit was 500 m; with 10BASE2, it was 185 m. Interestingly, these two types of Ethernet get their name from the maximum segment lengths—if you think of 185 m as being close to 200 m, then the last digit of the names defines the multiple of 100 m that is the maximum length of a segment. That’s really where the 5 and the 2 came from in the names.

In some cases, the length was not enough. So, a device called a repeater was developed. One of the problems with using longer segment lengths was that the signal sent by one device could attenuate too much if the cable was longer than 500 m or 185 m, respectively. Attenuation means that when electrical signals pass over a wire, the strength of the signal gets smaller the farther along the cable it travels. It’s the same concept behind why you can hear someone talking right next to you, but if that person speaks at the same volume and you are across the room, you might not hear her because the sound waves have attenuated.

Repeaters allow multiple segments to be connected by taking an incoming signal, interpreting the bits as 1s and 0s, and generating a brand new, clean signal. A repeater does not simply amplify the signal because amplifying the signal might also amplify any noise picked up along the way.

NOTE : Because the repeater does not interpret what the bits mean, but does examine and generate electrical signals, a repeater is considered to operate at Layer 1.
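The difference between amplifying and regenerating a signal can be illustrated with a small Python sketch. It is deliberately simplified: the signal is modeled as one voltage-like number per bit, and the sample values, the 0.5 threshold, and the function names are assumptions chosen for the example, not details of real Ethernet encoding.

```python
def amplify(samples, gain):
    """An amplifier scales everything it receives, including the noise."""
    return [s * gain for s in samples]

def regenerate(samples, threshold=0.5, high=1.0, low=0.0):
    """A repeater decides whether each sample is a 1 or a 0, then sends a clean level."""
    return [high if s >= threshold else low for s in samples]

# Hypothetical received levels for the bits 1, 0, 1, 1 after the signal has
# weakened on the cable and picked up a little noise along the way.
received = [0.62, 0.07, 0.55, 0.66]

print(amplify(received, 2))    # stronger, but the noise is doubled as well
print(regenerate(received))    # clean 1s and 0s at full strength
```

The regenerated output carries no trace of the noise, which is why a repeater can extend a segment without passing accumulated noise along.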

So, why all this focus on standards for Ethernets that you will never work with? Well, these older standards provide a point of comparison to how things work today, with several of the features of these two early standards being maintained today. Now, on to an Ethernet standard that is still found occasionally in production networks today—10BASE-T.

10BASE-T Ethernet

10BASE-T solved several problems with the early Ethernet specifications. 10BASE-T allowed the use of telephone cabling that was already installed, or simply allowed the use of cheaper, easier-to-install cabling when new cabling was required. 10BASE-T networks make use of devices called hubs, as shown in Figure 3-5.

The physical 10BASE-T Ethernet uses Ethernet cards in the computers, cabling, and a hub. The hubs used to create a 10BASE-T Ethernet are essentially multiport repeaters. That means that the hub simply regenerates the electrical signal that comes in one port and sends the same signal out every other port. By doing so, 10BASE-T creates an electrical bus, just like 10BASE2 and 10BASE5. Therefore, collisions can still occur, so CSMA/CD access rules continue to be used.

The use of 10BASE-T hubs gives Ethernet much higher availability compared with 10BASE2 and 10BASE5 because a single cable problem could, and probably did, take down those types of LANs. With 10BASE-T, a cable is run from each device to a hub, so a single cable problem affects only one device.

The concept of cabling each device to a central hub, with that hub creating the same electrical bus as in the older types of Ethernet, was a core fact of 10BASE-T Ethernet. Because hubs continued the concept and physical reality of a single electrical path that is shared by all devices, today we call this shared Ethernet: All devices are sharing a single 10-Mbps bus.

A variety of terms can be used to describe the topology of networks. The term star refers to a network with a center, with branches extended outward, much like how a child might draw a picture of a star. 10BASE-T network cabling uses a star topology, as seen in Figure 3-5. However, because the hub repeats the electrical signal out every port, the effect is that the network acts like a bus topology. So, 10BASE-T networks are a physical star network design, but also a logical bus network design. (Chapter 11 covers the types of topologies and their meaning in more depth.)

Ethernet 10BASE-T Cabling

The PCs and hub in Figure 3-5 typically use Category 5 UTP cables with RJ-45 connectors, as shown in Figure 3-2. The Ethernet cards in each PC have an RJ-45 connector, as does the hub; these connectors are larger versions of the same type of connector used for telephone cords between a phone and the wall plate in the United States. So, connecting the Ethernet cables is as easy as plugging in a new phone at your house.

The details behind the specific cable used to connect to the hub are important in real life as well as for the INTRO exam. The detailed specifications are covered in Chapter 11, and the most typical standards are covered here. You might recall that Ethernet specifies that the pair of wires on pins 1 and 2 is used to transmit data, and pins 3 and 6 are used for receiving data. The PC Ethernet cards do indeed use the pins in exactly that way.

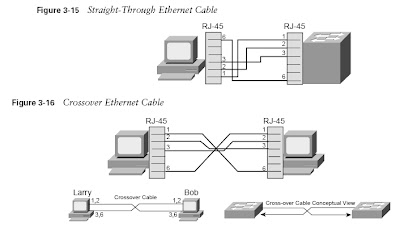

The cable used to connect the PCs to the hub is called a straight-through cable, as shown back in Figure 3-2. In a straight-through cable, the wire connected to pin 1 on one end of the cable is connected to pin 1 on the other side, pin 2 is connected to pin 2 on the other end, and so on. Therefore, when Larry sends data on the pair on pins 1 and 2, the hub receives the electrical signal over the straight-through cable on pins 1 and 2. So, for the hub to correctly receive the data, the hub must think oppositely, as compared to the PC—in other words, the hub receives data on pins 1 and 2, and transmits on pins 3 and 6. Figure 3-6 outlines how it all works.

For example, Larry might send data on pins 1 and 2, with the hub receiving the signal on pins 1 and 2. The hub then repeats the electrical signal out the other ports, sending the signal to Archie and Bob. The hub transmits the signal on pins 3 and 6 on the cables connected to Archie and Bob because Archie and Bob expect to receive data on pins 3 and 6.

In some cases, you need to cable two devices directly together with Ethernet, but both devices use the same pair for transmitting data. For instance, you might want to connect two hubs, and each hub transmits on pins 3 and 6, as just mentioned. Similarly, you might want to create a small Ethernet between two PCs simply by cabling the two PCs together—but both PCs use pins 1 and 2 for transmitting data. To solve this problem, you use a special cable called a crossover cable. Instead of pin 1 on one end of the cable being the same wire as pin 1 on the other end of the cable, pin 1 on one end of the cable becomes pin 3 on the other end. Similarly, pin 2 is connected to pin 6 at the other end, pin 3 is connected to pin 1, and pin 6 is connected to pin 2. Figure 3-7 shows an example with two PCs connected and a crossover cable.

Both Bob and Larry can transmit on pins 1 and 2—which is good because that’s the only thing an Ethernet card for an end user computer can do. Because pins 1 and 2 at Larry connect to pins 3 and 6 at Bob, and because Bob receives frames on pins 3 and 6, the receive function works as well. The same thing happens for frames sent by Bob to Larry—Bob sends on his pins 1 and 2, and Larry receives on pins 3 and 6.
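The pin logic for straight-through and crossover cables can be checked with a short Python sketch. The pin numbers come from the text; the dictionary and function names are made up for the example, and only the four pins that carry data are modeled.

```python
# Each cable maps a pin on one end to the pin it reaches on the other end.
STRAIGHT_THROUGH = {1: 1, 2: 2, 3: 3, 6: 6}
CROSSOVER = {1: 3, 2: 6, 3: 1, 6: 2}

PC_TX, PC_RX = {1, 2}, {3, 6}      # Ethernet card in a PC
HUB_TX, HUB_RX = {3, 6}, {1, 2}    # port on a hub

def link_works(cable, a_tx, a_rx, b_tx, b_rx):
    """True if each end's transmit pins arrive on the other end's receive pins."""
    a_to_b = {cable[pin] for pin in a_tx} == b_rx
    # Both cable maps are symmetric, so the same table works from the B end.
    b_to_a = {cable[pin] for pin in b_tx} == a_rx
    return a_to_b and b_to_a

print(link_works(STRAIGHT_THROUGH, PC_TX, PC_RX, HUB_TX, HUB_RX))  # PC to hub
print(link_works(CROSSOVER, PC_TX, PC_RX, PC_TX, PC_RX))           # PC to PC
print(link_works(STRAIGHT_THROUGH, PC_TX, PC_RX, PC_TX, PC_RX))    # wrong cable
```

The rule that falls out of the sketch is simple: use a straight-through cable when the two ends transmit on different pairs, and a crossover cable when they transmit on the same pair.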

Most of the time, you will not actually connect two computers directly with an Ethernet cable. However, you typically will use crossover cables for connections between switches and hubs. An Ethernet cable between two hubs or switches often is called a trunk. Figure 3-8 shows a typical network with two switches in each building and the typical cable types used for each connection.

10BASE-T Hubs

Compared to 10BASE2 and 10BASE5, hubs solved some cabling and availability problems. However, the use of hubs allowed network performance to degrade as utilization increased, just like when 10BASE2 and 10BASE5 were used, because 10BASE-T still created a single electrical bus shared among all devices on the LAN. Ethernets that share a bus cannot reach 100 percent utilization because of collisions and the CSMA/CD arbitration algorithm. To solve the performance problems, the next step was to make the hub smart enough to ensure that collisions simply did not happen—which means that CSMA/CD would no longer be needed. First, you need a deeper knowledge of 10BASE-T hubs before the solution to the congestion problem becomes obvious. Figure 3-9 outlines the operation of half-duplex 10BASE-T with hubs.

The figure details how the hub works, with one device sending and no collision. If PC1 and PC2 sent a frame at the same time, a collision would occur. At Steps 4 and 5, the hub would forward both electrical signals, which would cause the overlapping signals to be sent to all the NICs. So, because collisions can occur, CSMA/CD logic still is needed to have PC1 and PC2 wait and try again.

NOTE : PC2 would sense a collision because of its loopback circuitry on the NIC. The hub does not forward the signal that PC2 sent to the hub back to PC2. Instead, each NIC loops the frame that it sends back to its own receive pair on the NIC, as shown in Step 2 of the figure. Then, if PC2 is sending a frame and PC1 also sends a frame at the same time, the signal sent by PC1 is forwarded by the hub to PC2 on PC2’s receive pair. The incoming signal from the hub, plus the looped signal on PC2’s NIC, lets PC2 notice that there is a collision. Who cares? Well, to appreciate full-duplex LAN operation, you need to know about the NIC’s loopback feature.

Performance Issues: Collisions and Duplex Settings

10BASE2, 10BASE5, and 10BASE-T Ethernet would not work without CSMA/CD. However, because of the CSMA/CD algorithm, Ethernet becomes more inefficient under higher loads. In fact, during the years before LAN switches made these types of phenomena go away, the rule of thumb was that an Ethernet began to degrade when the load began to exceed 30 percent utilization. In the next section, you will read about two things that have improved network performance, both relating to the reduction or even elimination of collisions: LAN switching and full-duplex Ethernet.

Reducing Collisions Through LAN Switching

The term collision domain defines the set of devices for which their frames could collide. All devices on a 10BASE2, 10BASE5, or 10BASE-T network using a hub risk collisions between the frames that they send, so all devices on one of these types of Ethernet networks are in the same collision domain. For instance, all the devices in Figure 3-9 are in the same collision domain.

LAN switches overcome the problems created by collisions and the CSMA/CD algorithm by removing the possibility of a collision. Switches do not create a single shared bus, like a hub; they treat each individual physical port as a separate bus. Switches use memory buffers to hold incoming frames as well, so when two attached devices send a frame at the same time, the switch can forward one frame while holding the other frame in a memory buffer, waiting to forward the second frame until after the first one has been forwarded. So, as Figure 3-10 illustrates, collisions can be avoided.

In Figure 3-10, both PC1 and PC3 are sending at the same time. The switch looks at the destination Ethernet address and forwards the frame from PC1 to PC2 at the same instant that it forwards the frame from PC3 to PC4. The big difference between the hub and the switch is that the switch interpreted the electrical signal as an Ethernet frame and processed the frame to make a decision. (The details of Ethernet addressing and framing are coming up in the next two sections.) A hub simply repeats the electrical signal and makes no attempt to interpret the electrical signal (Layer 1) as a LAN frame (Layer 2). So, a hub actually performs OSI Layer 1 functions, repeating an electrical signal, whereas a switch performs OSI Layer 2 functions, actually interpreting Ethernet header information, particularly addresses, to make forwarding decisions.
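The contrast between the two devices can be sketched in Python. This is a minimal model under stated assumptions: the port numbers and addresses are invented, and the switch is given a ready-made table that says which address sits on which port, so the sketch does not show how a real switch builds that table or what it does with an unknown destination.

```python
def hub_forward(in_port, ports):
    """Layer 1: repeat the signal out every port except the one it arrived on."""
    return [p for p in ports if p != in_port]

def switch_forward(dest_mac, mac_table):
    """Layer 2: read the destination address and pick the single matching port."""
    return [mac_table[dest_mac]]

ports = [1, 2, 3, 4]

# Hypothetical address-to-port table for PC1 through PC4.
mac_table = {
    "0000.0C12.0001": 1,
    "0000.0C12.0002": 2,
    "0000.0C12.0003": 3,
    "0000.0C12.0004": 4,
}

print(hub_forward(1, ports))                        # every other port
print(switch_forward("0000.0C12.0002", mac_table))  # only PC2's port
```

Because the switch sends each frame out only one port, a frame from PC1 to PC2 and a frame from PC3 to PC4 never share a wire.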

Buffering also helps prevent collisions. Imagine that PC1 and PC3 both sent a frame to PC4 at the same time. The switch, knowing that forwarding both frames to PC4 would cause a collision, would buffer one frame until the first one has been completely sent to PC4. Two features of switching bring a great deal of improved performance to Ethernet, as compared with hubs:

• If only one device is cabled to each port of a switch, no collisions occur. If no collisions can occur, CSMA/CD can be disabled, solving the Ethernet performance problem.

• Each switch port does not share the bandwidth, but it has its own separate bandwidth, meaning that a switch with 10-Mbps ports has 10 Mbps of bandwidth per port.

So, LAN switching brings significant performance to Ethernet LANs. The next section covers another topic that effectively doubles Ethernet performance.
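A little arithmetic makes the bandwidth difference concrete. The Python sketch below is a best-case comparison only: the function names are hypothetical, the hub figure assumes every device is equally busy, and it ignores the capacity lost to collisions, so real shared Ethernets did worse than the number shown.

```python
def hub_share_mbps(link_mbps, devices):
    """Best-case average share per device when all devices share one bus."""
    return link_mbps / devices

def switch_total_mbps(link_mbps, ports):
    """Total capacity when every switch port has its own separate bandwidth."""
    return link_mbps * ports

# Five devices on a 10-Mbps hub versus five devices on a 10-Mbps switch.
print(hub_share_mbps(10, 5))      # 2.0 Mbps each, at best
print(switch_total_mbps(10, 5))   # 50 Mbps in total, 10 Mbps per port
```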

Eliminating Collisions to Allow Full-Duplex Ethernet

The original Ethernet specifications used a shared bus, over which only one frame could be sent at any point in time. So, a single device could not be sending a frame and receiving a frame at the same time because it would mean that a collision was occurring. So, devices simply chose not to send a frame while receiving a frame. That logic is called half-duplex logic.

Ethernet switches allow multiple frames to be sent over different ports at the same time. Additionally, if only one device is connected to a switch port, there is never a possibility that a collision could occur. So, LAN switches with only one device cabled to each port of the switch allow the use of full-duplex operation. Full duplex means that an Ethernet card can send and receive concurrently. Consider Figure 3-11, which shows the full-duplex circuitry used with a single PC cabled to a LAN switch.

Full duplex allows the full speed—10 Mbps, in this example—to be used in both directions simultaneously. For this to work, the NIC must disable its loopback circuitry. So far in this chapter, you have read about the basics of 12 years of Ethernet evolution. Table 3-4 summarizes some of the key points as they relate to what is covered in this initial section of the chapter.

Ethernet Data-Link Protocols

One of the most significant strengths of the Ethernet family of protocols is that these protocols use the same small set of data-link protocols. For instance, Ethernet addressing works the same on all the variations of Ethernet, even back to 10BASE5. This section covers most of the details of the Ethernet data-link protocols.

Ethernet Addressing

Ethernet LAN addressing identifies either individual devices or groups of devices on a LAN. Unicast Ethernet addresses identify a single LAN card. Each address is 6 bytes long, is usually written in hexadecimal, and, in Cisco devices, typically is written with periods separating each set of four hex digits. For example, 0000.0C12.3456 is a valid Ethernet address. The term unicast addresses, or individual addresses, is used because it identifies an individual LAN interface card. (The term unicast was chosen mainly for contrast with the terms broadcast, multicast, and group addresses.)
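As a small illustration of the notation, the following Python sketch converts the Cisco dotted form into the 6 raw bytes it represents and back again. The function names are made up for this example, and accepting colon or hyphen separators is an added convenience, not something the text above requires.

```python
def parse_mac(text):
    """Convert a MAC address string to 6 raw bytes.

    Accepts the Cisco dotted form (0000.0C12.3456) as well as
    colon- or hyphen-separated forms.
    """
    hex_digits = text.replace(".", "").replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"expected 12 hex digits, got {len(hex_digits)}")
    return bytes.fromhex(hex_digits)  # raises ValueError on non-hex input


def format_cisco(mac):
    """Render 6 bytes as three dot-separated groups of four hex digits."""
    digits = mac.hex().upper()
    return ".".join(digits[i:i + 4] for i in range(0, 12, 4))


mac = parse_mac("0000.0C12.3456")
print(len(mac))           # 6
print(format_cisco(mac))  # 0000.0C12.3456
```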

Computers use these addresses to identify the sender and receiver of an Ethernet frame. For instance, imagine that Fred and Barney are on the same Ethernet, and Fred sends Barney a frame. Fred puts his own Ethernet MAC address in the Ethernet header as the source address and uses Barney’s Ethernet MAC address as the destination. When Barney receives the frame, he notices that the destination address is his own address, so Barney processes the frame. If Barney receives a frame with some other device’s unicast address in the destination address field, Barney simply does not process the frame.

The IEEE defines the format and assignment of LAN addresses. The IEEE requires globally unique unicast MAC addresses on all LAN interface cards. (IEEE calls them MAC addresses because the MAC protocols such as IEEE 802.3 define the addressing details.) To ensure a unique MAC address, the Ethernet card manufacturers encode the MAC address onto the card, usually in a ROM chip. The first half of the address identifies the manufacturer of the card. This code, which is assigned to each manufacturer by the IEEE, is called the organizationally unique identifier (OUI). Each manufacturer assigns a MAC address with its own OUI as the first half of the address, with the second half of the address being assigned a number that this manufacturer has never used on another card.
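The two halves of an address can be separated with simple string slicing. The sketch below assumes the Cisco dotted notation used earlier in this section; `split_mac` is a hypothetical helper name.

```python
def split_mac(mac_text):
    """Return (OUI, manufacturer-assigned half) as 6 hex digits each."""
    digits = mac_text.replace(".", "").upper()
    if len(digits) != 12:
        raise ValueError("expected 12 hex digits")
    return digits[:6], digits[6:]


oui, assigned = split_mac("0000.0C12.3456")
print(oui)       # 00000C
print(assigned)  # 123456
```

Here the first half, 00000C, is the code that the IEEE assigned to the manufacturer, and 123456 is the value the manufacturer chose for this particular card.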

Many terms can be used to describe unicast LAN addresses. Each LAN card comes with a burned-in address (BIA) that is burned into the ROM chip on the card. BIAs sometimes are called universally administered addresses (UAAs) because the IEEE universally (well, at least worldwide) administers address assignment. Regardless of whether the BIA is used or another address is configured, many people refer to unicast addresses as either LAN addresses, Ethernet addresses, or MAC addresses.

Group addresses identify more than one LAN interface card. The IEEE defines two general categories of group addresses for Ethernet:

• Broadcast addresses: The most often used IEEE group MAC address, the broadcast address, has a value of FFFF.FFFF.FFFF (hexadecimal notation). The broadcast address implies that all devices on the LAN should process the frame.

• Multicast addresses: Multicast addresses are used to allow a subset of devices on a LAN to communicate. Some applications need to communicate with multiple other devices. By sending one frame, all the devices that care about receiving the data sent by that application can process the data, and the rest can ignore it. The IP protocol supports multicasting. When IP multicasts over an Ethernet, the multicast MAC addresses used by IP follow this format: 0100.5exx.xxxx, where any value can be used in the last half of the addresses.
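The receive logic for unicast, broadcast, and multicast addresses can be sketched as follows. One detail here goes beyond the text above: the sketch recognizes group addresses by testing the lowest-order bit of the first byte, which is how the IEEE address format marks them. The function names and the `joined_groups` parameter are hypothetical.

```python
BROADCAST = bytes.fromhex("ffffffffffff")


def classify(dst):
    """Label a 6-byte destination address."""
    if dst == BROADCAST:
        return "broadcast"
    if dst[0] & 0x01:  # lowest bit of the first byte marks a group address
        return "multicast"
    return "unicast"


def should_process(dst, my_mac, joined_groups=frozenset()):
    """Decide whether a card with address my_mac processes the frame."""
    kind = classify(dst)
    if kind == "broadcast":
        return True                   # every device processes broadcasts
    if kind == "multicast":
        return dst in joined_groups   # only devices that care about the group
    return dst == my_mac              # unicast: only the addressed card
```

For example, a card with address 0000.0C12.3456 processes a frame sent to FFFF.FFFF.FFFF, but it processes a frame sent to 0100.5e00.0001 only if that group is in `joined_groups`.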

Ethernet Framing

Framing defines how a string of binary numbers is interpreted. The physical layer helps you get a string of bits from one device to another. When the receiving device gets the bits, how should they be interpreted? The term framing refers to the definition of the fields assumed to be in the data that is received. In other words, framing defines the meaning of the bits transmitted and received over a network.

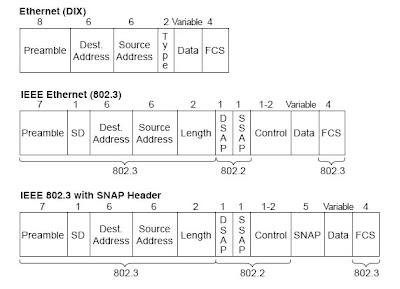

For instance, you just read an example of Fred sending data to Barney over an Ethernet. Fred put Barney’s Ethernet address in the Ethernet header so that Barney would know that the Ethernet frame was meant for Barney. The IEEE 802.3 standard defines the location of the destination address field inside the string of bits sent across the Ethernet. Figure 3-12 shows the details of several types of LAN frames.
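To make the idea of fixed field locations concrete, here is a minimal Python sketch that pulls the addresses out of a received frame. It assumes the frame starts at the destination address (any preamble already removed) and uses the standard field sizes: 6 bytes of destination, 6 bytes of source, then a 2-byte Type or Length field. The function name is hypothetical.

```python
import struct


def parse_header(frame):
    """Split the first 14 bytes into destination, source, and Type/Length."""
    if len(frame) < 14:
        raise ValueError("frame too short for an Ethernet header")
    # "!" means network byte order; 6s = 6 raw bytes; H = 2-byte integer.
    dst, src, type_or_length = struct.unpack("!6s6sH", frame[:14])
    return dst, src, type_or_length


frame = bytes.fromhex("00000c123456" "00000c654321" "0800") + b"payload"
dst, src, type_or_length = parse_header(frame)
print(dst.hex())            # 00000c123456
print(hex(type_or_length))  # 0x800
```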

Every little field in these frames might not be interesting, but you should at least remember some details about the contents of the headers and trailers. In particular, the addresses and their location in the headers are important. Also, the names of the fields that identify the type of data inside the Ethernet frame (namely, the Type, DSAP, and SNAP fields) are important. Finally, the fact that an FCS exists in the trailer is also vital.
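The role of the FCS can be illustrated with Python's standard `zlib.crc32`, which computes a 32-bit CRC of the same family that Ethernet uses. Treat this as a sketch only: the helper names are hypothetical, and the little-endian byte order chosen for the 4-byte trailer is an assumption of this example. Note that the check only detects a damaged frame; it does not repair it.

```python
import zlib


def add_fcs(frame_without_fcs):
    """Append a 4-byte CRC-32 trailer computed over the frame contents."""
    fcs = zlib.crc32(frame_without_fcs).to_bytes(4, "little")
    return frame_without_fcs + fcs


def fcs_ok(frame_with_fcs):
    """Recompute the CRC and compare it with the received trailer."""
    body, fcs = frame_with_fcs[:-4], frame_with_fcs[-4:]
    return zlib.crc32(body).to_bytes(4, "little") == fcs


good = add_fcs(b"example frame contents")
damaged = bytes([good[0] ^ 0x01]) + good[1:]  # flip one bit in transit
print(fcs_ok(good))     # True
print(fcs_ok(damaged))  # False
```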

The IEEE 802.3 specification limits the data portion of the 802.3 frame to a maximum of 1500 bytes. The Data field was designed to hold Layer 3 packets; the term maximum transmission unit (MTU) defines the maximum Layer 3 packet that can be sent over a medium. Because the Layer 3 packet rests inside the data portion of an Ethernet frame, 1500 bytes is the largest IP packet allowed over an Ethernet.

Identifying the Data Inside an Ethernet Frame

Each data-link header has a field with a code that defines the type of protocol header that follows. For example, in the first frame in Figure 3-13, the Destination Service Access Point (DSAP) field has a value of E0, which means that the next header is a Novell IPX header. Why is that? Well, when the IEEE created 802.2, it saw the need for a protocol type field that identified what was inside the field called “data” in an IEEE Ethernet frame.

The IEEE called its Type field the destination service access point (DSAP). When the IEEE first created the 802.2 standard, anyone with a little cash could register favorite protocols with the IEEE and receive a reserved value with which to identify those favorite protocols in the DSAP field. For instance, Novell registered IPX and was assigned hex E0 by the IEEE. However, the IEEE did not plan for a large number of protocols—and it was wrong. As it turns out, the 1-byte-long DSAP field is not big enough to number all the protocols.

To accommodate more protocols, the IEEE allowed the use of an extra header, called a Subnetwork Access Protocol (SNAP) header. In the second frame of Figure 3-13, the DSAP field is AA, which implies that a SNAP header follows the 802.2 header, and the SNAP header includes a 2-byte protocol type field. The SNAP protocol type field is used for the same purpose as the DSAP field, but because it is 2 bytes long, all the possible protocols can be identified. For instance, in Figure 3-13, the SNAP type field has a value of 0800, signifying that the next header is an IP header. RFC 1700, “Assigned Numbers” (www.isi.edu/in-notes/rfc1700.txt), lists the SAP and SNAP Type field values and the protocol types that they imply.

Table 3-6 summarizes the fields that are used for identifying the types of data contained in a frame.

Some examples of values in the Ethernet Type and SNAP Protocol fields are 0800 for IP and 8137 for NetWare. Examples of IEEE SAP values are E0 for NetWare, 04 for SNA, and AA for SNAP. Interestingly, the IEEE does not have a reserved DSAP value for TCP/IP; SNAP headers must be used to support TCP/IP over IEEE Ethernet.
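The decision process described in this section can be sketched in Python using the example values above. A few details are assumptions drawn from the standard frame layouts rather than from this text: a value of hex 0600 or greater in the 2-byte field after the addresses is treated as a Type, a smaller value as a Length; the 802.2 header is taken to be 3 bytes (DSAP, SSAP, Control); and the SNAP header is taken to be a 3-byte OUI followed by the 2-byte protocol type. The function and table names are hypothetical.

```python
import struct

ETHERTYPES = {0x0800: "IP", 0x8137: "NetWare"}  # Type and SNAP protocol values
SAPS = {0xE0: "NetWare", 0x04: "SNA"}           # IEEE SAP values
SNAP_SAP = 0xAA                                 # DSAP that signals a SNAP header


def identify_protocol(frame):
    """Return a name for the protocol carried in the frame's data field."""
    (type_or_length,) = struct.unpack("!H", frame[12:14])
    if type_or_length >= 0x0600:
        # Large values are a Type field that names the protocol directly.
        return ETHERTYPES.get(type_or_length, "unknown")
    # Otherwise the field is a length and an 802.2 header follows.
    dsap = frame[14]
    if dsap != SNAP_SAP:
        return SAPS.get(dsap, "unknown")
    # Skip 3 bytes of 802.2 and 3 bytes of SNAP OUI to reach the SNAP type.
    (snap_type,) = struct.unpack("!H", frame[20:22])
    return ETHERTYPES.get(snap_type, "unknown")
```

A frame whose DSAP is E0 is reported as NetWare, while a frame whose DSAP is AA and whose SNAP type is 0800 is reported as IP.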

Layer 2 Ethernet Summary

As mentioned earlier in this chapter, physical layer protocols define how to deliver data across a physical medium. Data-link protocols make that physical network useful by defining how and when the physical network is used. Ethernet defines the OSI Layer 1 functions for Ethernet, including cabling, connectors, voltage levels, and cabling distance limitations, as well as many important OSI Layer 2 functions. In this section, four of these data link features were emphasized, as shown in Table 3-7.

Recent Ethernet Standards

In most networks today, you would not use 10BASE2 or 10BASE5. In fact, you might not have many 10BASE-T hubs still in your network. More recently created alternatives, such as Fast Ethernet and Gigabit Ethernet, provide faster Ethernet options at reasonable costs. Both have gained widespread acceptance in networks today, with Fast Ethernet most likely being used on the desktop and Gigabit Ethernet being used between networking devices or on servers. Additionally, 10 Gigabit Ethernet provides yet another improvement in speed and performance and is covered briefly in Chapter 11.

Fast Ethernet

Fast Ethernet, as defined in IEEE 802.3u, retains many familiar features of 10-Mbps IEEE 802.3 Ethernet variants. The age-old CSMA/CD logic still exists, but it can be disabled for full-duplex point-to-point topologies in which no collisions can occur. The 802.3u specification calls for the use of the same old IEEE 802.3 MAC and 802.2 LLC framing for the LAN headers and trailers. A variety of cabling options is allowed—unshielded and shielded copper cabling as well as multimode and single-mode fiber. Both Fast Ethernet shared hubs and switches can be deployed.

Two of the key features of Fast Ethernet, as compared to 10-Mbps Ethernet, are higher bandwidth and autonegotiation. Fast Ethernet operates at 100 Mbps; enough said. The other key difference, autonegotiation, allows an Ethernet card or switch to negotiate dynamically to discover whether it should use 10 or 100 Mbps. So, many Ethernet cards and switch ports are called 10/100 cards or ports today because they can autonegotiate the speed. The endpoints autonegotiate whether to use half duplex or full duplex as well. If autonegotiation fails, the device settles for half-duplex operation at 10 Mbps.
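The outcome of autonegotiation can be modeled as choosing the best setting that both ends support. The following Python sketch is a simplification under stated assumptions: it models only the final choice, not the signaling the two ends exchange, and the preference order, the names, and the use of `None` to represent a failed negotiation are all choices made for this example.

```python
# Preference order, best first: (speed in Mbps, duplex).
PREFERENCE = [(100, "full"), (100, "half"), (10, "full"), (10, "half")]
FALLBACK = (10, "half")  # the setting used when negotiation fails


def negotiate(local, peer):
    """Pick the best mode both ends advertise.

    peer=None models a failed negotiation, such as a peer that does not
    take part in autonegotiation at all.
    """
    if peer is None:
        return FALLBACK
    for mode in PREFERENCE:
        if mode in local and mode in peer:
            return mode
    return FALLBACK


ten_hundred = set(PREFERENCE)             # a 10/100 card or port
ten_only = {(10, "full"), (10, "half")}   # an older 10-Mbps card
print(negotiate(ten_hundred, ten_hundred))  # (100, 'full')
print(negotiate(ten_hundred, ten_only))     # (10, 'full')
print(negotiate(ten_hundred, None))         # (10, 'half')
```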

The autonegotiation process has been known to fail. Cisco recommends that, for devices that seldom move, such as servers and switches, you should configure the LAN switch and the device to use the identical desired setting instead of depending on autonegotiation. Cisco recommends using autonegotiation for switch ports connected to end-user devices because these devices are moved frequently relative to servers or other network devices, such as routers.

Gigabit Ethernet

The IEEE defines Gigabit Ethernet in standards 802.3z for optical cabling and 802.3ab for electrical cabling. Like Fast Ethernet, Gigabit Ethernet retains many familiar features of slower Ethernet variants. CSMA/CD still is used and can be disabled for full-duplex support. The 802.3z and 802.3ab standards call for the use of the same old IEEE 802.3 MAC and 802.2 LLC framing for the LAN headers and trailers. The most likely places to use Gigabit Ethernet are between switches, between switches and a router, and between a switch and a server.

Gigabit Ethernet is similar to its slower cousins in several ways. The most important similarity is that the same Ethernet headers and trailers are used, regardless of whether it’s 10 Mbps, 100 Mbps, or 1000 Mbps. If you understand how Ethernet works for 10 and 100 Mbps, then you know most of what you need to know about Gigabit Ethernet. Gigabit Ethernet differs from the slower Ethernet specifications in how it encodes the signals onto the cable. Gigabit Ethernet is obviously faster, at 1000 Mbps, or 1 Gbps.

Foundation Summary

The “Foundation Summary” section of each chapter lists the most important facts from the chapter. Although this section does not list every fact from the chapter that will be on your CCNA exam, a well-prepared CCNA candidate should know, at a minimum, all the details in each “Foundation Summary” section before going to take the exam.

Table 3-8 lists the various protocol specifications for the original three IEEE LAN standards.

Figure 3-14 depicts the cabling and basic operation of an Ethernet network built with 10BASE-T cabling and an Ethernet hub.

Figures 3-15 and 3-16 show Ethernet straight-through and crossover cabling.

Full-duplex Ethernet cards can send and receive concurrently. Figure 3-17 shows the full-duplex circuitry used with a single PC cabled to a LAN switch.

Table 3-9 summarizes some of the key points as they relate to what is covered in this initial section of the chapter.

Figure 3-18 shows the details of several types of LAN frames.

Table 3-10 summarizes the fields that are used for identifying the types of data contained in a frame.

Ethernet also defines many important OSI Layer 2 functions. In this chapter, four of these features were emphasized, as shown in Table 3-11.

=================================================

Quiz4: Data Link Layer Fundamentals: Ethernet LA

1) (1 mark)

Which of the following is true about the Ethernet FCS field?

It is used for error recovery.

It is 2 bytes long.

It resides in the Ethernet trailer, not the Ethernet header.

It is used for encryption.

None of the above.

Leave blank

2) (1 mark)

Which of the following is true about Ethernet crossover cables?

Pins 1 and 2 are reversed on the other end of the cable.

Pins 1 and 2 connect to pins 3 and 6 on the other end of the cable.

Pins 1 and 2 connect to pins 3 and 4 on the other end of the cable.

The cable can be up to 1000 m to cross over between buildings.

None of the above.

Leave blank

3) (1 mark)

Which of the following are true about the CSMA/CD algorithm?

The algorithm never allows collisions to occur.

Collisions can happen, but the algorithm defines how the computers should notice a collision and how to recover.

The algorithm works only with two devices on the same Ethernet.

None of the above.

Leave blank

4) (1 mark)

Which of the following are part of the functions of OSI Layer 2 protocols?

Framing

Delivery of bits from one device to another

Addressing

Error detection

Defining the size and shape of Ethernet cards

Leave blank

5) (1 mark)

Which of the following would be a collision domain?

All devices connected to an Ethernet hub

All devices connected to an Ethernet switch

Two PCs, with one cabled to a router Ethernet port with a crossover cable, and the other PC cabled to another router Ethernet port with a crossover cable.

None of the above

Leave blank
